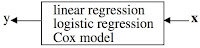

Analysis in the data modeling community, he argues, begins "...with assuming a stochastic data model for the inside of the black box" (199).

The algorithmic modeling community, however, "...considers the inside of the box complex and unknown. Their approach is to find a function...--an algorithm that operates on x to predict the responses of y" (199).

The overarching message of Breiman's article is an important one that is still relevant today, eight years later. An excerpt summarizes it quite well:

> My biostatistician friends tell me, "Doctors can interpret logistic regression." There is no way they can interpret a black box containing fifty trees hooked together. In a choice between accuracy and interpretability, they'll go for interpretability. Framing the question as the choice between accuracy and interpretability is an incorrect interpretation of what the goal of a statistical analysis is. The point of a model is to get useful information about the relation between the response and predictor variables (209-210).

He continues by pointing out that a model doesn't necessarily have to be simple in order to provide useful insight. This point struck me because, on the surface, it seems to defy parsimony, a central principle of model selection (e.g., MDL, AIC, BIC). Granted, Breiman is referring to a more general type of model selection--data modeling vs. algorithmic modeling--but the contrast is still interesting. More importantly, Breiman was exactly right in pointing out the strange statistical reasoning that pervades many biomedical fields--do we want answers to our questions, or do we want the layperson to understand every step involved in arriving at the answers?
